Part 2 of Augmented Synthetic Control: Cargo Cult Science. This time, we build a synthetic California and discuss its validity. Plus a look back at synthetic Connecticut.

Making your own control group when there is none: synthetic controls and cargo cult science.

This is a continuation of yesterday's post. Yesterday covered the prerequisites for synthetic controls; today we get into the meat of the topic.

If you're shaky on the basics of science and haven't already read yesterday's post, go read it. If you step into today's work and feel like you fell in the deep end, go back and read it again.

This is tough and may stretch you, but it's important. Stay with it. Read the post once, let it marinate, and read it again. Put questions in the comments.

Knowing about synthetic controls helps you join the conversation when you hear policymakers parroting studies they got from advocates. It helps you be a more knowledgeable citizen and advocate.

I will use the same video I did yesterday as a reference. It's linked first below. The gentleman in the video, in turn, uses a pretty well known paper analyzing the effectiveness of a 1988 California smoking-cessation measure (Prop 99). I found the paper and linked to it second for reference.

A natural approach to gauging the effectiveness of Prop 99 would be to compare cigarette sales before and after the law passed. We'd also want to compare those numbers (before and after) with places that didn't have Prop 99 as law.

The issue is that many other things can confound the measurement. Other states without Prop 99, and even the population of California before and after Prop 99, are, well, different.

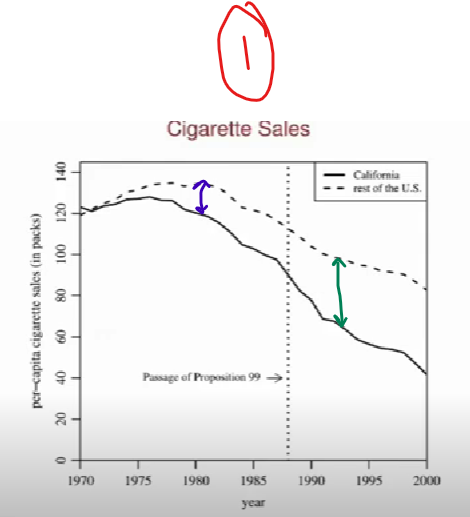

The only way to have done this would have been to ask God to make us two Californias and then we'd randomly pick one to have the law and the other to not. Absent that, you run into a problem like you can see in screenshot 1.

Cigarette sales were falling in California and nationwide prior to the passage of Prop 99, as the blue arrow illustrates. That calls into question whether any decrease below the national average after the passage of Prop 99 (the green arrow) is real.

Without God's cooperation on that second California, the researchers here decided to make themselves a control state, a "Synthetic California" (SCA).

Here's how. Look at screenshot 2.

The researchers picked 5 characteristics (boxed in red, e.g. beer consumption per capita). I want you to note that the last three rows are cigarette sales per capita for three different times.

They had a computer look at a table of these same characteristics for the remaining 49 states. From that list, the computer picked a mix of 38 states and weighted each one in a different proportion until the SCA matched real California on these 5 characteristics.

You might wonder why the researchers chose three different years to match the smoking rate. Why not match it for all time? I'm not 100% sure, but my guess is that any attempt to match the smoking rate for all time would have made building an SCA impossible.

Screenshot 3 shows the results of these efforts. Note the lack of a blue gap. SCA is, as it was designed to be, a perfect match to real California, but only going backwards.

The researchers then looked at where SCA sat vs. real California post-Prop 99 and claimed that this gap (here in green) is the actual, definitive causal effect of Prop 99. Their claim is that the gap shows that Prop 99 decreased smoking.
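The arithmetic behind that gap is simple, and a small sketch shows it. Again, this is an illustration under my own assumptions, not the paper's data: the two donor states, their weights, and all the sales figures are invented. The synthetic state's sales in a given year are the weighted sum of the donors' sales, and the "effect" is the real state's number minus the synthetic one.

```python
# Hypothetical sketch: weights, state names, and sales figures are invented.

TREATMENT_YEAR = 1988

weights = {"State X": 0.5, "State Y": 0.5}
donor_sales = {  # per-capita cigarette sales by year (made up)
    "State X": {1986: 100.0, 1987: 96.0, 1989: 92.0, 1990: 90.0},
    "State Y": {1986: 120.0, 1987: 116.0, 1989: 112.0, 1990: 110.0},
}
actual_sales = {1986: 110.0, 1987: 106.0, 1989: 95.0, 1990: 90.0}


def synthetic_series(weights, donor_sales):
    """Weighted sum of donor sales for each year."""
    years = sorted(next(iter(donor_sales.values())))
    return {y: sum(w * donor_sales[s][y] for s, w in weights.items())
            for y in years}


def gap_by_year(actual, synthetic):
    """Real minus synthetic; negative means the real state sold less."""
    return {y: actual[y] - synthetic[y] for y in sorted(actual)}


synthetic = synthetic_series(weights, donor_sales)
gaps = gap_by_year(actual_sales, synthetic)
pre_gaps = {y: g for y, g in gaps.items() if y < TREATMENT_YEAR}
post_gaps = {y: g for y, g in gaps.items() if y > TREATMENT_YEAR}

print("before:", pre_gaps)
print("after: ", post_gaps)
```

The "before" gaps are zero because the weights were chosen to make them zero. Only the "after" gaps carry any information, and reading them as the law's effect rests on the assumption that the blend would have kept tracking the real state had the law never passed.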

But this claim is technically only valid in Synthetic California. Trying to extend that to reality is another matter. Synthetic controls are, in fact, problematic for a number of reasons. Conclusions drawn from them are approximate at best. I won't go into a full treatment but will instead confine my concerns to the basics of science I outlined yesterday.

First, choosing your own control group invites a whole series of questions. Picking anything at all calls into question the results because it's not random. Picking any small (and finite) set of characteristics necessarily leaves others out.

That being the case, how on earth (as with the seed example yesterday) can you say that what you see is a real effect based solely on what you're studying?

Choosing a synthetic control means you have to pick a certain list of things to ask your computer to match. What do you pick? Why? Who is to say that the characteristics you pick are the best ones to watch, or a complete list? What about missing variables: population/demographic/cultural differences that are tougher to ask a computer to fit?

Second, by fitting your model to the data from before Prop 99, you ensure that your model works BACKWARD, but will it work going forward? Is it predictive? Repeatable?**

Remember back to yesterday. A key tenet of science is that your results need to be repeatable and reproducible. You should get the same results from multiple trials and other researchers should get the same results if they follow your procedure.

What if my computer algorithm matched and weighted which states go in the control group slightly differently so my Synthetic CA, even with the same 5 characteristics, didn't quite match theirs? Would I get the same results? Would our results match on every trial? Would they match 10 years into the future? 15?
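Here is a toy version of that worry. I built the numbers on purpose so that two different mixes of donor states match the "real" state perfectly before the law and then disagree afterward. Everything here is invented (states, sales, weights), and it says nothing about whether this actually happens with the Prop 99 data. It only shows that a perfect backward fit does not, by itself, pin down the forward answer.

```python
# Hypothetical sketch: every state, weight, and sales figure is invented,
# and the data are constructed specifically to make the two mixes disagree.

PRE_YEARS = [1986, 1987]
POST_YEARS = [1989, 1990]

donor_sales = {
    "State P": {1986: 100.0, 1987: 90.0, 1989: 80.0, 1990: 76.0},
    "State Q": {1986: 120.0, 1987: 110.0, 1989: 100.0, 1990: 96.0},
    "State R": {1986: 110.0, 1987: 100.0, 1989: 70.0, 1990: 64.0},
}
actual_sales = {1986: 110.0, 1987: 100.0, 1989: 75.0, 1990: 70.0}

# Two candidate mixes a fitting routine could plausibly land on.
weights_a = {"State P": 0.5, "State Q": 0.5, "State R": 0.0}
weights_b = {"State P": 0.0, "State Q": 0.0, "State R": 1.0}


def gaps(weights, years):
    """Real minus synthetic for each requested year."""
    return {
        y: actual_sales[y]
        - sum(w * donor_sales[s][y] for s, w in weights.items())
        for y in years
    }


pre_a, post_a = gaps(weights_a, PRE_YEARS), gaps(weights_a, POST_YEARS)
pre_b, post_b = gaps(weights_b, PRE_YEARS), gaps(weights_b, POST_YEARS)

print("mix A  before:", pre_a, " after:", post_a)
print("mix B  before:", pre_b, " after:", post_b)
```

In this made-up case, both mixes fit the past exactly, yet one says sales fell relative to the control and the other says they rose.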

In post 1 on this topic, I called Synthetic Controls "Cargo Cult Science": something that looks like real science with all the appropriate parts and so on, but that is not actual science. I stand by that. I highly question the validity of any study that uses a synthetic control. So should you.

Researchers are trying with synthetic controls to establish something that is not possible with their research tools. They're trying to get cause and effect from statistics.

I don't care how technical it sounds, I don't care about the vocabulary, I don't care how sophisticated the computer programming is, this sort of thing fails on the fundamentals of science (the things I mention above among others). When you knock the foundation out of a building, it can't stand no matter how carefully designed above ground.

Thus with synthetic controls and conclusions drawn from them.

If you'd like more on how this relates specifically to guns and gun policy studies, start at about the 6:43 mark in the third link below.

**This was a similar criticism leveled (and with good reason) at our own state's CDPHE COVID modeling. It worked backward but not forward. And wouldn't you just know it, the model sure seemed to bolster the Polis Administration's claims about what they needed to do in the future or how successful they were in the past.

https://www.tandfonline.com/doi/abs/10.1198/jasa.2009.ap08746

https://www.youtube.com/watch?v=PgiQ-LmJGMY&t=807s

Related:

A couple of (older) Reason videos on the "science" of saying whether gun control measures have any effect.

The short answer on nearly every question like this, in my opinion, is we don't know and I'm skeptical as to whether we ever will.

Nonetheless, researchers who want to publish something on the matter (and remember that publishing is the coin of the realm for academics) often turn to things like synthetic controls.

As an example, at about the 6:43 mark in the first video below, you will find a discussion of a "Synthetic Connecticut" used in one study and its validity.